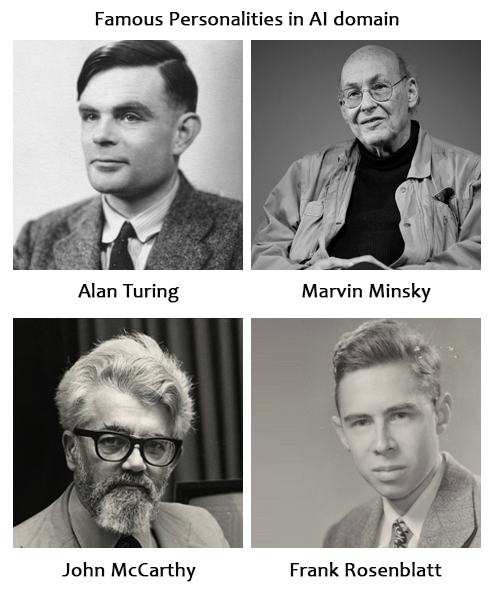

Humanity's fascination with building powerful, intelligent machines has brought about a major technological revolution in the past decade. The persistence and hard work of many scientists, researchers and mathematicians over the years has resulted in a new era of intelligent machines, or Artificial Intelligence. It all began in 1950, when Alan Turing, the father of theoretical computer science and artificial intelligence, proposed what is now known as the ‘Turing Test’. In his seminal paper “Computing Machinery and Intelligence”, he posed a simple question to probe the ‘intelligence’ of machines: “Are there imaginable digital computers which would do well in the imitation game?” According to the Turing test, a machine is considered intelligent if it can carry on a conversation that is indistinguishable from a conversation with a human being.

The birth of AI:

A landmark moment in the history of AI came with the Dartmouth Conference of 1956, organized by eminent figures in computer science such as Marvin Minsky, John McCarthy, Claude Shannon (father of information theory) and Nathan Rochester (designer of the IBM 701). The term “Artificial Intelligence” was coined by John McCarthy in the 1955 proposal for this conference. The conference aimed to identify the aspects of learning and intelligence that could be simulated by a machine. It served as one of the biggest catalysts for research and innovation in this domain.

The ups and downs in the AI community:

The success of the Dartmouth Conference led to many breakthroughs in artificial intelligence, such as the ‘General Problem Solver’ by Newell and Simon, which was used to solve many problems of logic and geometry. It also spurred further interest in natural language understanding and robotics, with government and other funding agencies providing the necessary grants. Frank Rosenblatt’s perceptron model fuelled research into ‘connectionist’ systems, more commonly known as Artificial Neural Networks (ANNs). Today, ANNs form the backbone of many recent innovations such as machine translation and voice recognition systems.

However, after the initial euphoria, AI began to lose its sheen and innovation took a backseat. Much of this has been attributed to limited computing facilities and to problems that were intractable or did not scale. For example, many vision and natural language applications require huge amounts of data, which in the early 1970s could not even be stored in databases, let alone accessed and learned from. In hindsight, these same bottlenecks help explain the present revolution in AI: once computing power increased and huge amounts of data became available, they stopped being limiting factors.

In the 1980s, programs known as expert systems rose to prominence. These systems answer questions and solve problems about a specific domain of knowledge, using logical rules derived from the knowledge of human experts. The idea predates this boom: the earliest examples were developed by Edward Feigenbaum and his students, starting with Dendral in 1965, which could identify chemical compounds from mass spectrometer readings. MYCIN, developed in 1972, was an expert system that could diagnose infectious blood diseases. In 1989, chess-playing programs such as Deep Thought and Hitech were able to defeat chess masters. Hopfield networks, a type of artificial neural network introduced by John Hopfield in 1982, re-popularized connectionism and ANNs, and learning algorithms such as backpropagation provided a formal ‘training’ method for ANNs. These discoveries of the 1980s paved the way for the commercial use of ANNs in Optical Character Recognition (OCR) and speech recognition in the 1990s.

Despite the success of the earlier expert systems, the AI community witnessed its second slump in the late 1980s and early 1990s. Expert systems remained domain-specific, required costly maintenance and were brittle, being overly sensitive to variations in their input. Other projects also suffered setbacks. However, from the mid-1990s to 2011, the field made good progress, possibly due to better hardware and computing capabilities.

In 1997, IBM's Deep Blue became the first computer program to defeat a reigning world chess champion, Garry Kasparov, in a match. Between 2005 and 2007, autonomous driving gained impetus as Stanford and CMU won DARPA's Grand Challenge (2005) and Urban Challenge (2007), respectively. Their autonomous vehicles and the research around them paved the way for the next big thing in AI: automation. In 2011, IBM's question-answering system Watson won the quiz show Jeopardy!, and it would later be applied in the medical community. Some key technologies behind the success of this ‘pre-deep’ era were probability and decision theory, Bayesian networks, hidden Markov models, information theory, stochastic modeling, optimization and evolutionary methods. These methods were used to solve pressing problems in data mining, search engines, industrial robotics, banking, speech recognition and medical science.

Going ‘deeper’:

The AI community got its long-awaited recognition in 2010-2012, when research on ‘Deep Learning’ started gaining momentum. Deep learning is a subset of machine learning, which in turn is a subset of the much broader field of AI. It is essentially a class of machine learning algorithms built from many layers (hence ‘deep’) of artificial neurons. Deep neural nets are designed to learn useful representations directly from data, and they typically need large amounts of it to do so.

In 2012, Alex Krizhevsky et al. demonstrated the use of a neural network with many layers for object recognition in the ImageNet challenge. They reduced the top-5 error rate on that task to 15.3%, against 26.2% for the next-best entry, ushering in a new era of AI. The tremendous progress in AI since then is largely attributed to faster GPUs (Graphics Processing Units) and the abundance of data used to ‘train’ deep neural nets for tasks such as classification, regression and game-playing. Autonomous driving, medical analysis and drug discovery, computer vision, and speech and text recognition are some of the areas that have benefited greatly from these advances, and the coming years show huge potential in this area.

Source: Wikipedia, Google Images
